Introduction

If you wear glasses, you know this feeling quite well: waking up in the morning, patting your hand across your night table, waiting to feel the touch of something fragile. Then picking them up gently, hoping the right side is up, and placing them on your nose. Opening your eyes and hoping you got it right and that you can finally see the familiar look of your bedroom. If your vision is not clear, you rub the sleep from your eyes. If that doesn’t help, you have this gut feeling that you need to go see your optometrist…

Just as blurred vision tells us that our eyesight has changed and that we might need a new glasses prescription, it is critical for autonomous vehicles to know when their sensors have lost accuracy or calibration.

Stereo cameras are particularly challenging because the two cameras are calibrated relative to each other, so movement of either camera can degrade the calibration. While mono systems are not particularly affected by small vibrations and slight movements, stereo cameras are directly impacted by these minor changes, which can reduce accuracy and sometimes cause a complete loss of calibration. This does not mean we shouldn’t use stereo systems for self-driving cars. The advantages of stereo systems are enormous, and it is very likely that stereo vision systems will be mandatory to achieve fully autonomous driving. The technological and algorithmic challenges must be solved, and the first task is to determine when the stereo calibration is lost and notify the DynamiCal™ processes to kick in.

Before we explain how to score calibration, we’ll just mention that high-accuracy DynamiCal™ is probably one of the more difficult processes to develop. At Foresight, we are proud to be part of a fairly small group of organizations that have been able to achieve this huge milestone and advancement.

So, let’s dive in!

Stereo cameras that were calibrated to each other, pixel-wise, in the lab do not stay perfectly calibrated forever. Even if the base they’re mounted on is rigid, the cameras might shift slightly over time, especially in a car driving at high speed. There must be a way to continuously verify that the cameras are still calibrated. After all, passengers’ lives and health are at stake.

When a system gets miscalibrated

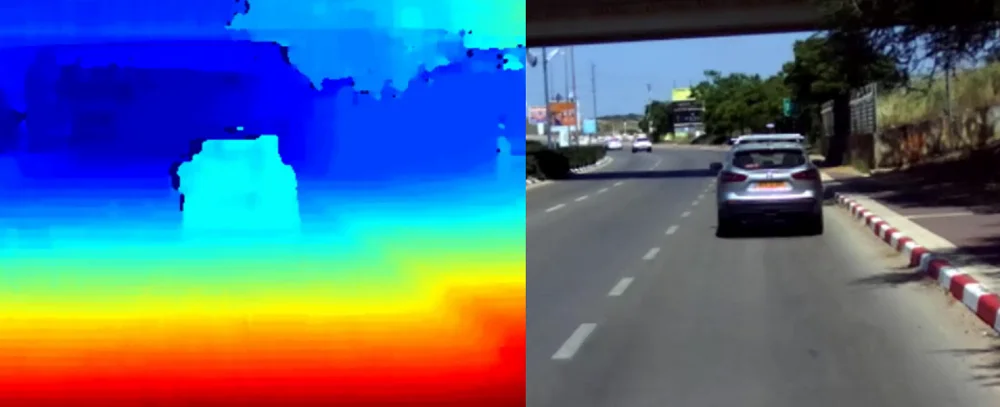

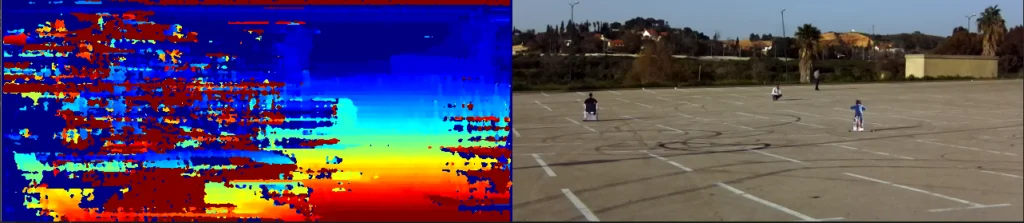

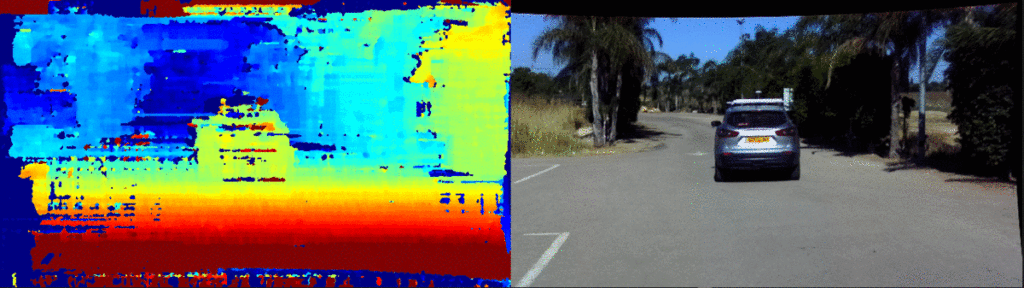

In a stereo camera system, the most valuable information acquired is the depth map of a scene (Figure 1). This depth estimation is quickly affected whenever the internal or external camera parameters change, as seen in Figure 2. Generally, if the camera optics remain untouched post-installation, the main external parameters that might be affected over time are the camera positions with respect to each other.
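To see why depth reacts so quickly to a calibration change, it helps to recall the standard relation for a rectified pinhole stereo pair. The symbols here are assumptions introduced for illustration, not values from our setup: $f$ is the focal length in pixels, $B$ the baseline between the cameras, $d$ the disparity in pixels, and $Z$ the depth.

$$
Z = \frac{f B}{d}, \qquad \delta Z \approx \left|\frac{\partial Z}{\partial d}\right| \delta d = \frac{Z^{2}}{f B}\,\delta d
$$

Under this model, a fixed disparity error $\delta d$ produces a depth error that grows with the square of the distance, so even a sub-pixel shift caused by a small relative camera movement can matter for distant objects.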

To understand or measure the quality of the depth estimation given by a pair of stereo images, one can usually inspect the disparity between them, or their alignment, by eye. Analytically, a score can be calculated to grade how well the depth estimation process succeeded.

It is then possible to detect and alert when the stereo camera parameters are not calibrated by analyzing the acquired stereo images using computer vision techniques.

Approaches

The following are a few methods for tackling this problem:

- Measure the difference between the two images by remapping one onto the other through the calculated disparity map. This method can potentially also be used to assess the disparity accuracy, provided it proves robust enough.

- Measure the alignment between the two images by matching features and analyzing their vertical offsets (which should always be zero in the case of perfect rectification).

- Use redundant sensors as a reference and compare the depth maps measured by each sensor. This approach is more relevant for applications such as autonomous vehicles, where other redundant sensors are mandatory.

- Apply DynamiCal™ methods to the images and calculate the differences between the newly estimated parameters and the original ones.
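As an illustration of the feature-alignment idea in the list above, here is a minimal sketch that turns vertical offsets into a score. It is not Foresight’s scoring method: the function name, the `tolerance_px` parameter, and the mapping from offset to a 0–100 score are all assumptions made for this example, and the matched points are assumed to come from some feature matcher run on the rectified images.

```python
from statistics import median


def vertical_offset_score(left_pts, right_pts, tolerance_px=1.0):
    """Map the median absolute vertical offset of matched points to a 0-100 score.

    left_pts / right_pts: equal-length lists of (x, y) pixel coordinates of the
    same features in the rectified left and right images.
    tolerance_px: hypothetical offset (in pixels) at which the score drops to 50.
    """
    if len(left_pts) != len(right_pts) or not left_pts:
        raise ValueError("need equal, non-empty lists of matched points")

    # With perfect rectification, matching points lie on the same image row,
    # so every vertical offset should be zero.
    offsets = [abs(yl - yr) for (_, yl), (_, yr) in zip(left_pts, right_pts)]

    # The median keeps a few wrong matches from dominating the result.
    med = median(offsets)

    # Hypothetical mapping: 100 at zero offset, 50 at tolerance_px, falling after.
    return 100.0 / (1.0 + med / tolerance_px)
```

The median is used instead of the mean because feature matching usually yields some outliers, and a single bad match should not signal a calibration loss.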

Three main perturbations can result in a poor disparity map, suggesting that the cameras have lost calibration:

- Changes in the rotation angles between the stereo images

- Changes in the brightness/contrast between the stereo images

- Occlusions between the stereo images – meaning that a part of one image is visually blocked by an object which doesn’t block the same area in the other image.

When calculating the calibration score, the approach used should take the above perturbations into account. Not all methods are robust enough to overcome them. Depending on the application, a combination of different methods might be the recommended approach.
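To make the robustness point concrete, the sketch below applies the remapping approach to a single scanline while guarding against two of the perturbations: it normalizes intensities so that a global brightness or contrast change cancels out, and it skips pixels that have no valid disparity (for example, occluded ones). The function names, the use of `None` for invalid pixels, and the convention that a left pixel at column `x` matches the right pixel at column `x - d` are assumptions of this example, not a description of our implementation.

```python
from statistics import mean, pstdev


def normalize(values):
    """Return a zero-mean, unit-variance copy; cancels global gain and offset."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def remap_error(left_row, right_row, disparity_row):
    """Mean absolute difference between a left scanline and the right scanline
    warped onto it through the disparity.

    disparity_row[x] is an integer disparity, or None where no valid match
    exists (e.g. the pixel is occluded in the other image).
    """
    left_vals, warped_vals = [], []
    for x, d in enumerate(disparity_row):
        if d is None:
            continue  # skip occluded / invalid pixels
        xr = x - d  # assumed convention: left column x matches right column x - d
        if 0 <= xr < len(right_row):
            left_vals.append(left_row[x])
            warped_vals.append(right_row[xr])
    if not left_vals:
        raise ValueError("no valid pixels to compare")

    # Compare normalized intensities so brightness/contrast changes do not count.
    l_norm, w_norm = normalize(left_vals), normalize(warped_vals)
    return mean(abs(a - b) for a, b in zip(l_norm, w_norm))
```

A low error means the disparity explains the image pair well; a high error on many frames would be one hint that the calibration has drifted. A real system would apply this over full images and probably per region rather than per scanline.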

Test case – autonomous vehicles

One of the largest and most promising applications is, of course, autonomous vehicles. As mentioned above, because of the immense complexity of corner cases and because human lives are at stake, fully self-driving cars will probably be equipped with stereo vision systems.

We compared two stereo system setups: (a) a rigid base on which both cameras are firmly mounted, and (b) two separate camera modules that were attached to the vehicle roof by magnets.

Both setups were taken for a test drive on a standard urban street, and the results are as follows:

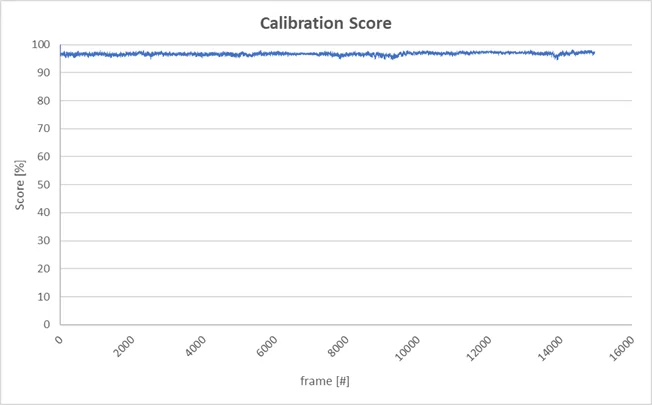

The stereo system with the rigid base held a very high calibration score, i.e. good calibration, for each of the frames as expected (Figure 3).

In contrast, for the system where the cameras were installed independently, the calibration score dropped to very low values whenever a small bump or pothole in the road caused the cameras to move slightly. This can easily happen in a regular driving environment, as shown in Figure 4.

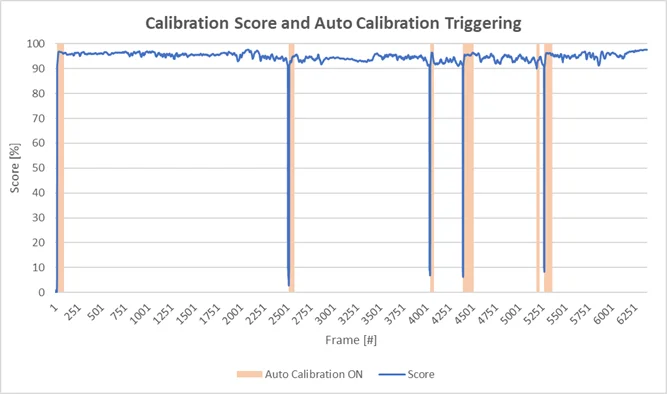

Figure 5 shows the calibration score being measured, calibration loss being detected (indicated by a score of 90% or less), and Foresight’s DynamiCal™ algorithm being triggered, which in turn recalibrates the system and raises the calibration score back to higher levels.
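The detect-and-trigger logic described above can be sketched in a few lines. Only the 90% threshold comes from our test; the function name and the `recalibrate` callback are hypothetical stand-ins, and the callback says nothing about how DynamiCal™ works internally.

```python
def monitor_calibration(scores, recalibrate, threshold=90.0):
    """Call recalibrate(frame_index) whenever a frame's score is at or below
    the threshold, and return the indices of the frames that triggered it.

    scores: per-frame calibration scores in percent.
    recalibrate: hypothetical callback standing in for the recalibration step.
    """
    triggered = []
    for frame_index, score in enumerate(scores):
        if score <= threshold:  # "90% or less" indicates calibration loss
            recalibrate(frame_index)
            triggered.append(frame_index)
    return triggered
```

For example, with scores of 98, 97, 85, 99, 90 and 95, the callback would run on the third and fifth frames.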

Conclusion

Our test illustrates the necessity of DynamiCal™ technology for a safe and robust stereo vision system for autonomous vehicles. Foresight, as a leading innovator in stereo technology, has unique capabilities and technological breakthroughs to lead the world to the next level of autonomous vehicles.