Perceiving the world in 3D is vital, which is probably why humans have two eyes. If that holds for the human brain, shouldn't it apply to autonomous vehicles as well?

Cameras are the most precise instruments available for capturing accurate data at high resolution. Like human eyes, cameras capture the small details and vividness of a scene at a level that no other sensors, including radar, ultrasonic, and lasers, can match. People have always admired an artist who can draw or paint a scene in true perspective, the way human eyes capture the world around us.

The next engineering challenge, and some say one of the most significant revolutions humanity will go through, is real-time intelligent machine vision and 3D perception. Smart machine vision applications include real-time surgical robots, smart airborne delivery, homeland security, and warfare, to name but a few. Cars driven by autonomous artificial intelligence (AI), with no need for a driver cabin or steering wheel, top the list of applications. As of now, most efforts in the autonomous vehicle (AV) industry are focused on advanced driver assistance systems (ADAS), since that is the first step toward fully self-driving cars. Cameras, usually in monocular or stereo vision systems, are the backbone of all ADAS applications. Regardless of the method, cameras are the basis for safe autonomous cars that can “see and drive” themselves.

Many of these applications can be implemented with a vision system composed of forward, rear, and side-mounted cameras for pedestrian detection, traffic sign recognition, blind-spot monitoring, and lane detection. Other features, such as adaptive cruise control (ACC), can be implemented robustly by fusing radar or LiDAR data with camera data, usually for relatively straight roads and higher speeds.

All real-world scenes that a camera encounters are three-dimensional. Objects that are at different depths in the real world may appear to be adjacent to each other in the two-dimensional mapped world of a camera sensor.

For example, in the accompanying images, the toy car in the foreground is closer to the camera than the storage shelf in the background. But how much closer is it? From a single 2D image, the answer depends entirely on the angle and distance at which the image was taken. To better understand this concept, note the difference in perspective between the images and how the car appears to move farther away when only the distance, angle, or height of the camera changes.

The human brain has the power of perspective, which allows us to make decisions about depth from a 2D scene. For a forward-mounted camera in a car, analyzing perspective is not so easy.

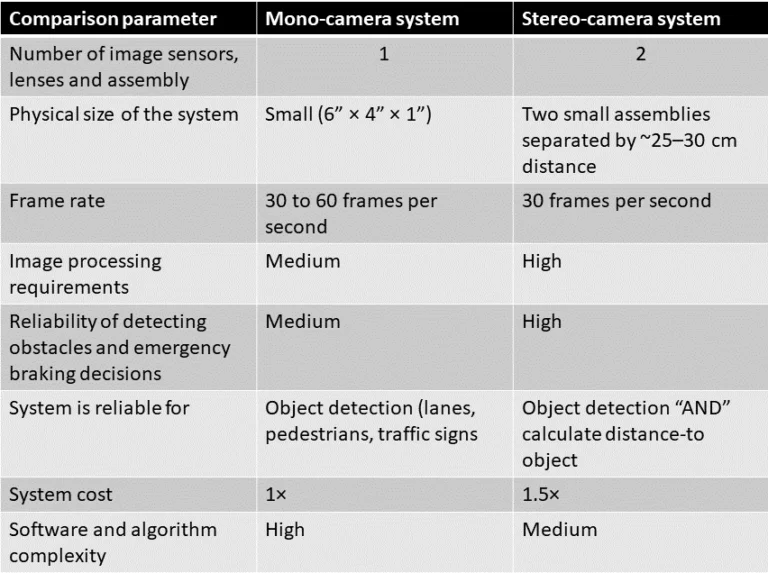

A single-camera sensor capturing video that needs to be processed and analyzed is called a monocular (single-eyed) system, or a mono system. A system with two cameras separated from each other is called a stereo-vision system. In general, the mono systems used in ADAS and AV applications are smaller in size since they have only one camera module, whereas stereo systems require two small assemblies separated from each other. The baseline (the distance between the two cameras) can range from a few centimeters up to a few meters, depending on the requirements. In addition, the image processing requirements for a mono system are not as demanding as for a stereo system. The table below compares the basic attributes of a monocular camera-based ADAS with a stereo camera-based ADAS.

The mono camera video system can do many things reasonably well. The analytics behind it can identify lanes, pedestrians, traffic signs, and other vehicles in the path of the car, all with good accuracy. However, the mono system is not as robust or reliable at calculating the 3D view of the world from the planar 2D frame that it receives from the single camera sensor. Perceiving the world in 3D is essential, and this is probably why humans and most advanced animals are born with two eyes.

So, what are the challenges (and opportunities) of stereo vision systems for autonomous vehicles?

Let’s simplify how stereo vision systems work:

The distance to an object is calculated from the difference between the object's projections in the two stereo cameras. The number of pixels by which a particular point is shifted in the right camera image relative to the left camera image is called the disparity. When the two stereo cameras are calibrated to each other, the measured disparity can be converted to the distance of the object from the cameras. Distance is inversely proportional to disparity, so nearby objects produce large disparities and distant objects produce small ones.
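As a minimal sketch of the relationship between disparity and distance, assuming an ideal, rectified pinhole stereo pair, depth can be written as Z = f · B / d, where f is the focal length in pixels, B is the baseline in meters, and d is the disparity in pixels. The function name and the rig values (1400 px focal length, 0.25 m baseline) are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for a rectified stereo pair under the pinhole model."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1400 px focal length, 0.25 m baseline.
for d in (70.0, 7.0, 0.7):
    z = depth_from_disparity(d, 1400.0, 0.25)
    print(f"disparity {d:5.1f} px -> depth {z:6.1f} m")
```

With these assumed values, a tenfold drop in disparity corresponds to a tenfold increase in distance, which is why far objects are represented by only a pixel or less of disparity.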

The maximum distance range and depth accuracy of stereo systems depend on many parameters. The three most important parameters are probably the following:

- Focal length of cameras

- Baseline

- Pixel size (and density) of each camera

This brings us to the first challenge:

Physical and optical constraints:

- A smaller pixel size (more pixels for the same sensor area) increases distance range but requires more computational resources.

- A larger distance between the two cameras increases distance range and accuracy but requires a rigid baseline, which is difficult to maintain.

- A larger focal length increases distance range but narrows the field of view (FOV).
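These trade-offs can be sketched numerically. Assuming the pinhole relation Z = f · B / d, a first-order estimate of the depth uncertainty caused by a disparity error Δd is ΔZ ≈ Z² · Δd / (f · B), with f expressed in pixels (lens focal length divided by pixel size). The function names and rig values below are hypothetical, and the one-pixel disparity error is an assumption for illustration.

```python
def focal_length_px(focal_length_mm, pixel_size_um):
    """Convert a lens focal length to pixel units for a given pixel pitch."""
    return focal_length_mm * 1000.0 / pixel_size_um


def depth_error_m(depth_m, focal_px, baseline_m, disparity_error_px=1.0):
    """First-order depth uncertainty caused by a disparity error, in meters."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


# Hypothetical rig: 6 mm lens, 3 um pixels, 0.25 m baseline, target at 50 m.
reference = depth_error_m(50.0, focal_length_px(6.0, 3.0), 0.25)
smaller_pixels = depth_error_m(50.0, focal_length_px(6.0, 1.5), 0.25)
wider_baseline = depth_error_m(50.0, focal_length_px(6.0, 3.0), 0.50)
longer_lens = depth_error_m(50.0, focal_length_px(12.0, 3.0), 0.25)
print(reference, smaller_pixels, wider_baseline, longer_lens)
```

Under this model, halving the pixel size, doubling the baseline, or doubling the focal length each halves the depth error at a given distance. The error also grows with the square of the distance, which is what ultimately limits the usable range.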

Since the AV system needs to be mounted on a vehicle, the baseline is limited by the size of the vehicle. A narrow FOV reduces the system's ability to detect objects that lie at a slight angle to the vehicle's direction of travel.
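To illustrate how focal length narrows the FOV, the sketch below uses the standard pinhole relation FOV = 2 · atan(sensor width / (2 · focal length)). The sensor parameters (1920 pixels wide, 3 µm pixels) and the function name are hypothetical, and lens distortion is ignored.

```python
import math


def horizontal_fov_deg(image_width_px, pixel_size_um, focal_length_mm):
    """Horizontal field of view in degrees for an ideal pinhole camera."""
    sensor_width_mm = image_width_px * pixel_size_um / 1000.0
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Hypothetical sensor: 1920 px wide with 3 um pixels (5.76 mm sensor width).
for f_mm in (4.0, 8.0, 16.0):
    print(f"{f_mm:4.1f} mm lens -> {horizontal_fov_deg(1920, 3.0, f_mm):.1f} deg")
```

Each step up in focal length extends range but shrinks the angular coverage, so objects off to the side of the driving direction fall outside the image sooner.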

Computation:

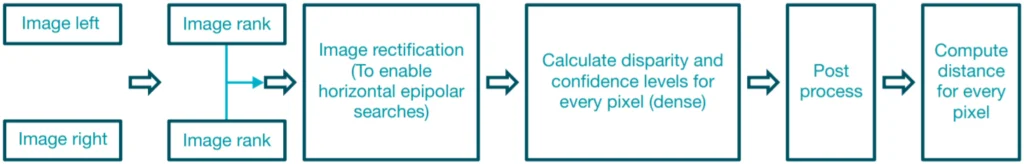

The process a stereo vision system goes through is shown below in a high-level block diagram:

The computation and memory requirements for the disparity calculations are extremely heavy. In fact, most general-purpose processors are barely able to perform such calculations in real time at the level required for ADAS or AV purposes. Adding higher-level algorithms, such as AI for lane-keeping and classification, requires even greater performance. This means that designing a real-time AV stereo vision system requires specialized hardware.
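To give a feel for that cost, here is a deliberately naive sketch of disparity estimation using brute-force sum-of-absolute-differences (SAD) block matching on rectified grayscale images stored as nested lists. This is a textbook technique used here for illustration, not the method of any particular product. The function names, block size, and disparity range are assumptions.

```python
def sad_disparity(left, right, max_disparity=16, block=5):
    """Brute-force SAD block matching; returns a per-pixel disparity map.

    Assumes rectified images, so a point at column x in the left image
    appears at column x - d in the right image. Border pixels stay 0.
    """
    h, w = len(left), len(left[0])
    half = block // 2
    disparity = [[0] * w for _ in range(h)]
    for y in range(half, h - half):
        for x in range(half + max_disparity, w - half):
            best_cost, best_d = float("inf"), 0
            for d in range(max_disparity + 1):
                # Compare the left block with the right block shifted by d.
                cost = 0
                for dy in range(-half, half + 1):
                    for dx in range(-half, half + 1):
                        cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disparity[y][x] = best_d
    return disparity


def matching_ops_per_second(width, height, max_disparity, block, fps):
    """Rough count of absolute-difference operations needed per second."""
    return width * height * (max_disparity + 1) * block * block * fps


# Hypothetical stream: 1280x720 at 30 fps, 64 disparity levels, 5x5 blocks.
print(matching_ops_per_second(1280, 720, 64, 5, 30))
```

For the assumed 1280x720 stream, the estimate comes to tens of billions of pixel comparisons per second before any rectification, filtering, or AI workload is added, which is why this stage is a natural candidate for dedicated hardware.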

Today, with processors that include dedicated hardware accelerators available at reasonable prices, building a high-performance stereo system has become a viable option.

Reliability:

The primary purpose of ADAS and autonomous vehicles is to avoid, or at least drastically reduce, road accidents. ADAS and AV vision systems must estimate distances correctly and robustly and alert the driver or vehicle to a possible collision, while also minimizing false positives. This requires the system to be designed and developed for the highest levels of robustness and to use electrical systems that meet the demands of automotive safety applications.

Foresight has been developing stereo vision systems for years, using technology and methods of the highest standards and drawing on know-how from homeland security applications.

The QuadSight 2.0™ system, a first-of-its-kind quad-camera multi-spectral vision solution, is driven by proven, advanced image processing algorithms and AI capabilities. The system is a seamless fusion of four cameras: two stereoscopic pairs, one of long-wave infrared (thermal) cameras and one of visible-light cameras, enabling highly accurate and reliable object detection under the most challenging lighting and weather conditions.

This is the future of autonomous vehicles.

Further Reading: ADAS accurate 3D perception